Technicalities Behind Image Relighting

September 6, 2022

Onur Tasar

Machine Learning Scientist

Clipdrop Image Relighting App

We recently introduced 💡 our image relighting AI application 💡, which lets you apply professional lighting to your images 📸 in real time ⚡️. With this app, you can make your images look magical, even with no background in professional digital photography.


In this blog post, we explain the technical details behind the app.

The Key Components: Depth Map & Surface Normals

To add new light sources and relight the image in a visually appealing way, the illumination needs to be (nearly) physically correct. For example, the image below (produced by our relighting app) shows how the image should look when we add a light source on the right. One half of the face is darker, since the other half blocks the light rays coming from the new light source.


One way to achieve physically correct relighting is to use depth maps and surface normals.

What are Depth and Surface Normal Maps?

The depth map is a grayscale image that encodes how near or far each pixel in the image is from the camera. The middle image below shows the depth map predicted by the Clipdrop Depth Estimation Model, where bright pixels represent near points and dark pixels represent far points.
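The post does not say what raw values the depth model outputs. The sketch below assumes a metric depth map stored as a NumPy array, where larger values mean farther away. It shows one way to produce the "near is bright, far is dark" visualization: take the inverse depth and rescale it to 8 bits. The function name `depth_to_grayscale` is hypothetical.

```python
import numpy as np

def depth_to_grayscale(depth: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Map a (H, W) depth map (larger = farther; assumed metric) to an
    8-bit grayscale image in which near points are bright and far points dark."""
    inv = 1.0 / np.maximum(depth, eps)          # inverse depth: near -> large value
    lo, hi = inv.min(), inv.max()
    norm = (inv - lo) / max(hi - lo, eps)       # rescale to [0, 1]
    return np.round(norm * 255).astype(np.uint8)

depth = np.array([[1.0, 2.0], [4.0, 8.0]])      # toy depths in meters
print(depth_to_grayscale(depth))
```

Using inverse depth rather than raw depth spends more of the gray range on nearby surfaces, where most of the detail in a portrait lives.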

The surface normal map is an image that encodes the normal vector of each pixel in its R (red), G (green), and B (blue) channels. The surface normals are crucial for determining how each image pixel should be lit. For example, a pixel whose normal points straight at the light source receives the strongest light, whereas a pixel whose normal is perpendicular to the incoming light rays (a grazing angle) receives none. More details on normal mapping can be found on the Wikipedia page. Below you can see the input image and the surface normal map predicted by the Clipdrop Surface Normal Estimation Model.

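The post does not specify Clipdrop's exact color encoding. A widely used convention maps each normal component from [-1, 1] to [0, 255], with x to red, y to green, and z to blue. Under that convention, a surface facing the camera becomes the familiar light blue (128, 128, 255). The sketch below assumes that convention; axis orientation varies between tools. The function names are hypothetical.

```python
import numpy as np

def encode_normals(normals: np.ndarray) -> np.ndarray:
    """(H, W, 3) unit normals with components in [-1, 1] -> uint8 RGB image.
    Assumed convention: x -> R, y -> G, z -> B (z pointing towards the viewer)."""
    rgb = (normals + 1.0) * 0.5 * 255.0
    return np.clip(np.round(rgb), 0, 255).astype(np.uint8)

def decode_normals(rgb: np.ndarray) -> np.ndarray:
    """uint8 RGB normal map -> (H, W, 3) unit normals (renormalized after quantization)."""
    n = rgb.astype(np.float64) / 255.0 * 2.0 - 1.0
    length = np.linalg.norm(n, axis=-1, keepdims=True)
    return n / np.maximum(length, 1e-8)

facing_camera = np.array([[[0.0, 0.0, 1.0]]])
print(encode_normals(facing_camera))             # a flat, camera-facing pixel
```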

Once accurate depth and surface normal maps are predicted, they can be used to compute the illumination with common reflection models such as the Lambertian or Phong models.
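The sketch below shows how a normal map turns into new lighting with the textbook Lambertian (diffuse) and Phong (specular) terms for a single directional light. It is not the app's actual renderer, and it rests on several assumptions:

- The input image is used directly as the albedo.
- The viewer is distant, looking along -z.
- The parameters `ambient`, `kd`, `ks` and `shininess` are illustrative values.
- Cast shadows are ignored; only how each surface faces the light is modeled.

```python
import numpy as np

def relight(image, normals, light_dir, light_color=(1.0, 1.0, 1.0),
            ambient=0.2, kd=0.8, ks=0.3, shininess=16.0):
    """
    image:     (H, W, 3) float RGB in [0, 1], used as the albedo (a simplification)
    normals:   (H, W, 3) unit normals, camera frame with +z towards the viewer
    light_dir: 3-vector pointing from the surface towards the light
    """
    l = np.asarray(light_dir, dtype=np.float64)
    l = l / np.linalg.norm(l)
    v = np.array([0.0, 0.0, 1.0])  # direction to a distant viewer, assumed constant

    # Lambert's cosine law: brightness proportional to max(0, n . l)
    n_dot_l = np.einsum("hwc,c->hw", normals, l)
    diffuse = np.clip(n_dot_l, 0.0, None)

    # Phong specular: reflect l about n and compare with the view direction
    r = 2.0 * n_dot_l[..., None] * normals - l
    r_dot_v = np.clip(np.einsum("hwc,c->hw", r, v), 0.0, None)
    specular = np.where(n_dot_l > 0, r_dot_v ** shininess, 0.0)  # no highlight on unlit side

    color = np.asarray(light_color, dtype=np.float64)
    shaded = (image * (ambient + kd * diffuse[..., None]) * color
              + ks * specular[..., None] * color)
    return np.clip(shaded, 0.0, 1.0)

# Toy example: a light on the right (+x) brightens right-facing pixels only.
img = np.full((1, 2, 3), 0.5)
normals = np.array([[[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]]])
print(relight(img, normals, light_dir=(1.0, 0.0, 0.0))[0, :, 0])
```

The pixel facing the new light keeps its full brightness, while the one facing away falls back to the ambient level. This is the same "one half of the face is darker" effect described above, here produced purely by surface orientation.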

Sounds Cool, but How Do We Predict Depth and Surface Normal Maps?

The main challenge here is predicting high quality depth and surface normal maps from a single image, which is referred to as monocular depth & normal estimation in the literature.

A good deal of research has recently tackled these specific problems. MiDaS and its variant are two state-of-the-art methods for monocular depth estimation. EPFL-VILAB has also recently introduced monocular depth and normal prediction models trained on their Omnidata dataset.

One option for predicting depth and normals, then, is to use these existing methods.

How About Synthetic Data?

While manual annotation is relatively easy for certain fundamental machine learning and computer vision tasks such as image classification and segmentation, collecting highly accurate annotations for very specific tasks like monocular depth and normal estimation is a big hassle.

Synthetic data is an alternative for training machine learning models, especially for problems where collecting annotations is extremely demanding. At Clipdrop, we make extensive use of synthetic data to solve challenging AI problems.

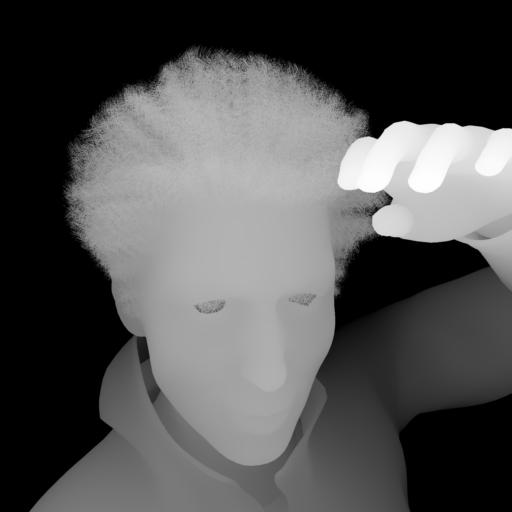

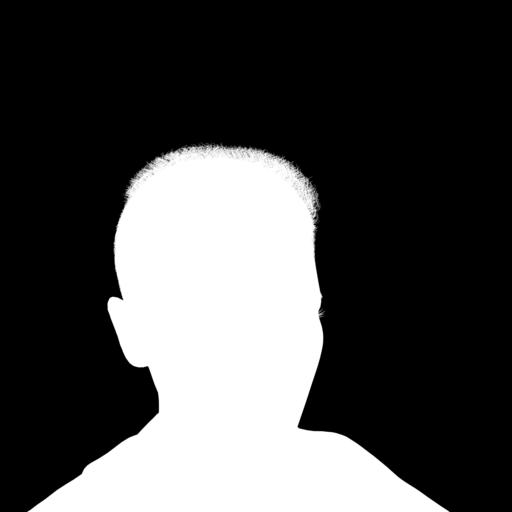

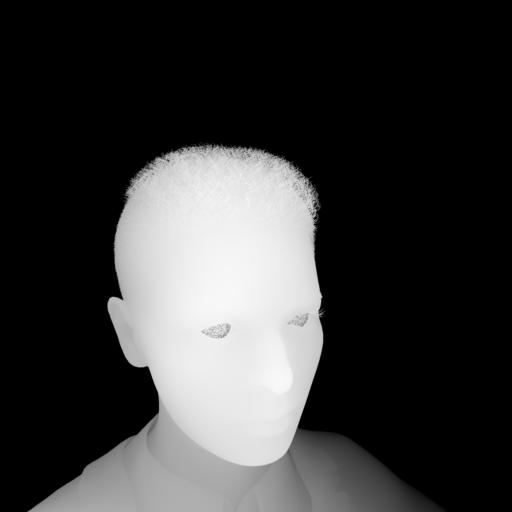

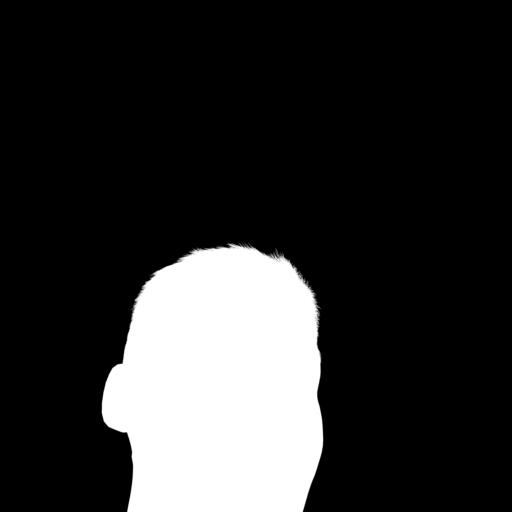

We recently built a human dataset containing thousands of diverse human models with a wide variety of clothes, poses, body types, facial expressions, facial and body hair, and more. We also have hundreds of indoor and outdoor environments with different lighting and weather conditions. With our custom data generation pipeline, we rendered images of people along with their masks, depth maps, and surface normal maps.

These are some examples from our dataset.

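The post does not say how the pipeline produces its normal maps; renderers usually output them directly. Depth and normals are still geometrically linked, which is why paired depth and normal annotations can be checked against each other. The sketch below derives normals from a depth map. It assumes a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` and depth measured along the optical axis. It back-projects every pixel to 3D and takes the cross product of the tangents along image columns and rows.

```python
import numpy as np

def normals_from_depth(depth, fx, fy, cx, cy):
    """(H, W) z-depth -> (H, W, 3) unit normals in a frame with
    x right, y up, z towards the viewer. Pinhole intrinsics are assumed known."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w, dtype=np.float64), np.arange(h, dtype=np.float64))
    # Back-project each pixel (OpenCV-style camera: x right, y down, z forward)
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x, y, depth], axis=-1)
    # Tangent vectors along image columns (u) and rows (v)
    dp_du = np.gradient(points, axis=1)
    dp_dv = np.gradient(points, axis=0)
    n = np.cross(dp_du, dp_dv)
    n /= np.maximum(np.linalg.norm(n, axis=-1, keepdims=True), 1e-12)
    # Orient every normal towards the camera, which sits at the origin
    facing_away = np.sum(n * points, axis=-1) > 0
    n[facing_away] *= -1
    # Flip y and z to get x right, y up, z towards the viewer
    return n * np.array([1.0, -1.0, -1.0])

flat_wall = np.full((5, 5), 2.0)                 # a plane 2 m in front of the camera
print(normals_from_depth(flat_wall, 100.0, 100.0, 2.0, 2.0)[2, 2])
```

A rendered normal map that disagrees strongly with the one derived from the rendered depth (away from depth discontinuities) would point to a bug in the data generation pipeline.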

Comparisons

In this section, we compare our custom, highly optimized models trained on our synthetic dataset with current state-of-the-art methods, using a few images from Unsplash.

Depth Estimation

We compare our monocular depth estimation model with MiDaS. Here are the predictions by these two models:

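The comparison above is visual. MiDaS outputs relative inverse depth, which is defined only up to an unknown scale and shift. So any numeric comparison between such a prediction and a reference (for example, ground truth from a synthetic render) first needs those two unknowns removed. A common practice in the monocular depth literature is to solve for them with least squares. The sketch below does that; the function name is hypothetical, and this is not the evaluation used in this post.

```python
import numpy as np

def align_scale_shift(pred, target, mask=None):
    """Find s, t minimizing ||s * pred + t - target||^2 over valid pixels.
    Returns the aligned prediction together with s and t."""
    if mask is None:
        mask = np.ones_like(pred, dtype=bool)
    p = pred[mask].ravel()
    q = target[mask].ravel()
    A = np.stack([p, np.ones_like(p)], axis=1)   # columns: [pred, 1]
    (s, t), *_ = np.linalg.lstsq(A, q, rcond=None)
    return s * pred + t, s, t

pred = np.linspace(0.0, 1.0, 12).reshape(3, 4)   # toy relative inverse depth
target = 3.0 * pred + 0.5                        # same structure, different scale/shift
aligned, s, t = align_scale_shift(pred, target)
print(s, t)
```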

Surface Normal Prediction

We compare our custom surface normal estimation model with the model trained on the Omnidata dataset.

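These normal-map comparisons are also visual. When reference normals are available, as they are for synthetic renders, a standard numeric measure is the per-pixel angle between predicted and reference normals, usually summarized by its mean or median. The sketch below computes it; the function name is hypothetical, and this is not the post's own evaluation.

```python
import numpy as np

def angular_error_deg(n_pred, n_ref, eps=1e-8):
    """Per-pixel angle (degrees) between two (H, W, 3) normal maps."""
    a = n_pred / np.maximum(np.linalg.norm(n_pred, axis=-1, keepdims=True), eps)
    b = n_ref / np.maximum(np.linalg.norm(n_ref, axis=-1, keepdims=True), eps)
    cos = np.clip(np.sum(a * b, axis=-1), -1.0, 1.0)  # guard arccos against rounding
    return np.degrees(np.arccos(cos))

ref = np.array([[[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]])
pred = np.array([[[0.0, 0.0, 2.0], [1.0, 0.0, 0.0]]])
err = angular_error_deg(pred, ref)
print(err, err.mean())
```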

Try Our Image Relighting App

Did you enjoy reading this blog post? Check out our other blog post about the image relighting application.

Try our image relighting application. It is free! No account or credit card is needed.

Don't hesitate to share your creative visuals with us. Follow us on Twitter for the latest updates.

If you have any questions, feel free to join our Slack Community or contact us.